The cronjob scripts

The "cronjobs" directory contains miscellaneous cronjob scripts used for automated periodic and scheduled maintenance. These are described below.

| Name | Description | Frequency | Default status |
|---|---|---|---|
| basket_cleanup.php | Cleans up shopping baskets for removed user sessions. | Once a week. | Enabled |
| clusterbinarypurge.php | Clears expired binary items from DB-based cluster installations (eZDB and eZDFS) | Once a week. | Enabled |
| hide.php | Hides nodes when a specified date and time is reached. | Not less than once a day. | Enabled |
| indexcontent.php | Performs delayed search indexing of newly added content objects. | Not less than once a day. | Enabled |
| old_drafts_cleanup.php | Removes old/unused drafts. | Once a month. | Disabled |
| internal_drafts_cleanup.php | Removes unused internal drafts. | Once a day. | Enabled |
| ldapusermanage.php | Synchronizes user account information with an LDAP server. | Not less than once a day. | Disabled |
| linkcheck.php | Validates published URLs. | Once a week. | Enabled |
| notification.php | Sends notifications to subscribed users. | Every 15-30 minutes. | Enabled |
| rssimport.php | Imports RSS feeds. | Not less than once a day. | Enabled |
| | Garbage collect expired sessions. | Once a day. | Disabled |
| subtreeexpirycleanup.php | Removes expired cache blocks with the "subtree_expiry" parameter. | Not less than once a day. | Enabled |
| staticcache_cleanup.php | Cleans the static cache. | Once a week. | Disabled |
| trashpurge.php | Purges trashed content objects. | Once a month. | Disabled |
| | Unlocks locked content objects. | Every 15-30 minutes. | Disabled |
| unpublish.php | Removes content objects when a specified date and time is reached. | Not less than once a day. | Enabled |
| updateviewcount.php | Updates the page view statistics by parsing the Apache log files. | Not less than once a day. | Disabled |
| workflow.php | Processes the work-flows. | Every 15-30 minutes. | Enabled |

Cleaning up expired data for webshop

The eZ Publish webshop functionality allows your customers to put products into their shopping baskets. The items in the basket can then be purchased by initiating the checkout process. The system stores information about a user's shopping basket in a database table called "ezbasket". Information about sessions is stored in the "ezsession" table.

If a user adds products to their basket and then stops shopping (for example, closes the browser window) without initiating the checkout process, that user's session will expire after a while. Expired sessions can be removed either automatically by eZ Publish or manually by the site administrator. When a user's session is removed from the database, the system does not clean up the shopping basket that was created during this session. In other words, the system removes an entry from the "ezsession" table, but the corresponding entry in the "ezbasket" table (if any) remains untouched. This behavior is controlled by the "BasketCleanup" setting located in the "[Session]" section of the "site.ini" configuration file (or its override). If it is set to "cronjob" (default), you will have to remove unused baskets periodically by running the "basket_cleanup.php" cronjob script.

Please note that removing unused baskets usually takes a lot of time on sites with many visitors. It is recommended to run the basket cleanup cronjob once a week. If you wish to run it together with other (more frequent) cronjobs, use the "BasketCleanupAverageFrequency" setting in the same section to specify how often the baskets will actually be cleaned up when the "basket_cleanup.php" cronjob is executed. If you wish to run "basket_cleanup.php" separately from other cronjobs, add the following line to the "[Session]" section of your "cronjob.ini.append.php" file:

BasketCleanupAverageFrequency=1

Please note that there is no need to run this cronjob if your site does not use the webshop functionality (or if it does but the "BasketCleanup" setting is set to "pageload").
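One way to read an "average frequency" setting is as a 1-in-N chance per cronjob run. The Python sketch below illustrates that interpretation only. It is an assumption about the mechanism, not the eZ Publish implementation, and "should_clean_baskets" is a hypothetical name.

```python
import random


def should_clean_baskets(average_frequency, rng=random):
    """Return True on roughly one call in `average_frequency`.

    Assumption: "average frequency" means a 1-in-N chance per cronjob run.
    A value of 1 (or less) means the cleanup happens on every run.
    """
    n = max(int(average_frequency), 1)
    # randint is inclusive on both ends, so exactly one of n outcomes matches.
    return rng.randint(1, n) == 1


if __name__ == "__main__":
    # With BasketCleanupAverageFrequency=1 the cleanup runs every time.
    print(all(should_clean_baskets(1) for _ in range(100)))
    # With a value of 10, about one run in ten performs the cleanup.
    seeded = random.Random(0)
    print(sum(should_clean_baskets(10, seeded) for _ in range(10_000)))
```

Under this reading, a value of 1 suits a dedicated weekly run, while a larger value spreads the expensive cleanup across runs of a more frequent cronjob group.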

Hiding nodes at specific times

The system can automatically hide a node when a specified date is reached. For example, you may want an article published on your site to become invisible after a couple of days, weeks or months. You do not want to remove the article, only to hide it. In this case, you will have to add a new attribute of the "Date and time" datatype to your article class and configure the hide cronjob. The following text describes how this can be done.

Adding an attribute to the "Article" class

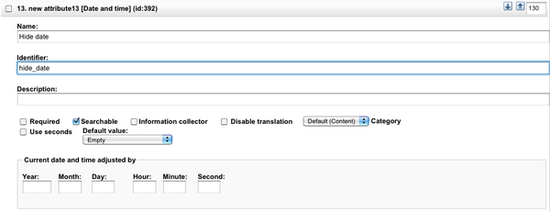

Go to "Setup - Classes" in the administration interface and select the "Content" class group to view the list of classes assigned to this group. Find the article class and click it's corresponding edit icon/button. You will be taken to the class edit interface. Select the "Date and time" datatype from the drop-down list located in the bottom, click the "Add attribute" button and edit the newly added attribute as shown in the following screenshot.

Note that both the name and the identifier can be set to anything (you specify which identifier to use in the ini file, see further below). Click "OK" when you are done. The system will add a new field called "Hide date" (the name of the newly added attribute in the example above) to the class, and the field will appear in the edit interface for the objects (in this case articles). When the articles are edited, this field can be used to specify when the cronjob should hide the nodes. If the attribute is left blank, the article will not be affected by the hide cronjob. Please note that the "hide.php" cronjob must be run periodically for this to work.

Configuring the "hide" cronjob

Add the following lines to your "content.ini.append.php" configuration file:

    [HideSettings]
    RootNodeList[]=2
    HideDateAttributeList[article]=hide_date

You should specify the identifier of the newly added attribute in the "HideDateAttributeList" configuration array using the class identifier as a key. In addition, you need to specify the ID number of the parent node for the articles using the "RootNodeList" configuration directive.
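The selection rule described above can be sketched in Python as follows. This is only an illustration under stated assumptions: the dictionary layout of a node and the name "nodes_to_hide" are hypothetical, and the sketch assumes the nodes passed in were already fetched from below the parents listed in "RootNodeList".

```python
from datetime import datetime


def nodes_to_hide(nodes, hide_date_attributes, now):
    """Pick the IDs of nodes whose hide date has been reached.

    `nodes` is a list of dicts with hypothetical keys: "node_id",
    "class_identifier", "attributes" (identifier -> datetime or None)
    and "hidden". `hide_date_attributes` mirrors HideDateAttributeList:
    class identifier -> attribute identifier.
    """
    due = []
    for node in nodes:
        attribute = hide_date_attributes.get(node["class_identifier"])
        if attribute is None:
            continue  # class is not configured for the hide cronjob
        hide_date = node["attributes"].get(attribute)
        if hide_date is None:
            continue  # blank attribute: the node is left alone
        if not node["hidden"] and hide_date <= now:
            due.append(node["node_id"])
    return due


if __name__ == "__main__":
    now = datetime(2010, 9, 14, 12, 0)
    nodes = [
        {"node_id": 10, "class_identifier": "article", "hidden": False,
         "attributes": {"hide_date": datetime(2010, 9, 1)}},
        {"node_id": 11, "class_identifier": "article", "hidden": False,
         "attributes": {"hide_date": None}},
    ]
    print(nodes_to_hide(nodes, {"article": "hide_date"}, now))
```

Only node 10 qualifies in the usage example, because node 11 has a blank hide date.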

Delayed search indexing

If the delayed indexing feature is enabled, newly and re-published objects will not be indexed immediately. In other words, eZ Publish will not index the content during the publishing process. Instead, the indexing cronjob will take care of this in the background and thus publishing will go a bit faster (since you don't have to wait for the content to be indexed). In order for this to work, the "indexcontent.php" cronjob must be executed periodically in the background (otherwise the content will be published but not indexed).

Please note that you do not need to run this script unless you have "DelayedIndexing=enabled" in the "[SearchSettings]" section of the "site.ini" configuration file (or an override).

Cleaning up old/unneeded drafts

Regular drafts (status "0")

The purpose of the "old_drafts_cleanup.php" cronjob script is to remove old drafts from the database. If enabled, this script will remove drafts that have been in the system for over 90 days. To set the number of days, hours, minutes and seconds before a draft is considered old and can be removed, specify the desired values in the "DraftsDuration[]" configuration array located in the "[VersionManagement]" block of an override for "content.ini". The maximum number of drafts removed per run of the script (100 by default) is controlled by the "DraftsCleanUpLimit" setting in the same block.

Internal/untouched drafts (status "5")

The purpose of the "internal_drafts_cleanup.php" cronjob script is to remove drafts that will probably never be published. If a version of a content object is created but not modified (for example, if someone clicked an "Add comments" button but didn't actually post anything), the status of the version will be "5". The "internal_drafts_cleanup.php" script will remove status "5" drafts that have been in the system for over 24 hours (1 day). To set the number of days, hours, minutes and seconds before an internal draft is considered old and can be removed, specify the desired values in the "InternalDraftsDuration[]" configuration array located in the "[VersionManagement]" block of an override for "content.ini". The maximum number of internal drafts removed per run of the script (100 by default) is controlled by the "InternalDraftsCleanUpLimit" setting in the same block.
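Both draft cleanup scripts follow the same pattern: compute a cutoff from a duration, then remove at most a limited number of matching drafts per run. A minimal Python sketch of that pattern is shown below. The tuple layout, the duration key names and the oldest-first ordering are assumptions made for illustration, not details taken from eZ Publish.

```python
from datetime import datetime, timedelta


def expired_drafts(drafts, status, duration, limit, now):
    """Return up to `limit` drafts of `status` older than `duration`.

    `drafts` is a list of (version_id, status, created) tuples; `duration`
    is a dict with optional "days", "hours", "minutes", "seconds" keys
    (hypothetical key names).
    """
    cutoff = now - timedelta(
        days=duration.get("days", 0),
        hours=duration.get("hours", 0),
        minutes=duration.get("minutes", 0),
        seconds=duration.get("seconds", 0),
    )
    old = [d for d in drafts if d[1] == status and d[2] < cutoff]
    old.sort(key=lambda d: d[2])  # oldest first (an assumption about ordering)
    return old[:limit]


if __name__ == "__main__":
    now = datetime(2010, 9, 14)
    drafts = [
        (1, 0, now - timedelta(days=100)),  # regular draft, older than 90 days
        (2, 0, now - timedelta(days=10)),   # regular draft, still recent
        (3, 5, now - timedelta(days=2)),    # internal draft, older than 1 day
    ]
    print([d[0] for d in expired_drafts(drafts, 0, {"days": 90}, 100, now)])
    print([d[0] for d in expired_drafts(drafts, 5, {"days": 1}, 100, now)])
```

The limit keeps each run short: drafts beyond the limit are simply picked up by a later run.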

Synchronizing user data with LDAP server

If the users are authenticated through an LDAP server, eZ Publish will fetch user account information from the external source and store it in the database. Local accounts are created when the users log in.

The "ldapusermanage.php" cronjob script can be used to synchronize local user account information with the external source in the background. It is recommended to run this script periodically when the site is connected to an LDAP server. The script will take care of typical maintenance tasks. For example, if a user is deleted from the LDAP directory, it will disable (but not remove) the local account.

Please note that the script will only update the eZ Publish database. Modification of external data (stored on an LDAP server) is not supported.

Checking URLs

In eZ Publish, every address that is input as a link into an attribute using the "XML block" or the "URL" datatype is stored in the URL table and thus the published URLs can be inspected and edited without having to interact with the content objects. This means that you don't have to edit and re-publish your content if you just want to change/update a link.

The URL table contains all the necessary information about each address including its status (valid or invalid) and the time it was last checked (when the system attempted to validate the URL). By default, all URLs are valid. The "linkcheck.php" cronjob script is intended to check all the addresses stored in the URL table by accessing the links one by one. If a broken link is found, its status will be set to "invalid". The last checked field will always be updated.

You will have to specify your site URLs using the "SiteURL" configuration directive located in the "[linkCheckSettings]" section of the "cronjob.ini.append.php" file. This will make sure that the "linkcheck.php" cronjob handles relative URLs (internal links) properly.

Please note that the link check script must be able to contact the outside world through port 80. In other words, the firewall must be opened for outgoing HTTP traffic from the web server that is running eZ Publish. From 3.9, it is possible to fetch data using an HTTP proxy specified in the "[ProxySettings]" section of "site.ini" (requires CURL support in PHP).

The following protocols are currently supported:

- http

- ftp

- file

- mailto

This means that all URLs that use one of the protocols listed above are eligible for checking. For http, ftp and file, the script will try to reach the target to make sure it is valid. For mailto, it will check whether the DNS of the domain containing the target address is valid.
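The protocol rules above, together with the handling of relative links through "SiteURL", can be sketched in Python using the standard library. This is an illustration rather than the logic of "linkcheck.php": "plan_check" and the strategy labels are hypothetical, no network access is performed, and the sketch assumes the site URL is given as a full URL including the scheme.

```python
from urllib.parse import urljoin, urlsplit

# Check strategy per protocol, following the description above.
CHECKS = {
    "http": "fetch",
    "ftp": "fetch",
    "file": "fetch",
    "mailto": "dns",
}


def plan_check(url, site_url):
    """Return (absolute_url, strategy); strategy is None if unsupported."""
    scheme = urlsplit(url).scheme.lower()
    if not scheme:
        # Relative (internal) link: resolve it against the site URL.
        url = urljoin(site_url, url)
        scheme = urlsplit(url).scheme.lower()
    return url, CHECKS.get(scheme)


if __name__ == "__main__":
    site = "http://www.example.com/"
    for link in ("/content/view/full/2", "mailto:info@example.com",
                 "gopher://example.com/"):
        print(plan_check(link, site))
```

A link with a strategy of None uses a protocol outside the supported list and would be skipped.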

Sending notifications

eZ Publish has a built-in notification system that allows users to receive information about various events. It is possible to be notified by email when objects are updated or published, when workflows are executed and so on. If you are going to use notifications on your site, you will have to run the "notification.php" cronjob script periodically. It will take care of sending notifications to subscribed users (this is done by launching the main notification processing script "kernel/classes/notification/eznotificationeventfilter.php").

If you are using the notification system, it is recommended to run this cronjob script every 15-30 minutes.

RSS import

The RSS import functionality makes it possible to receive feeds from various sites, for example, the latest community news from www.ez.no ( http://ez.no/rss/feed/communitynews ). You will have to configure this using the "Setup - RSS" part of the administration interface and run the "rssimport.php" cronjob periodically. This script will get new items for all your active RSS imports and publish them on your site (if an item already exists, it will be skipped).

Please note that the RSS import script must be able to contact the outside world through port 80. In other words, the firewall must be opened for outgoing HTTP traffic from the web server that is running eZ Publish. From 3.9, it is possible to fetch data using an HTTP proxy specified in the "[ProxySettings]" section of "site.ini" (requires CURL support in PHP).

Garbage collect expired sessions

This cronjob garbage-collects expired sessions as defined by the SessionTimeout setting. The expiry time is calculated when a session is created or updated. Normally, expired sessions are removed automatically by PHP's session garbage collection, but on some Debian-based Linux distributions this does not work because session garbage collection is handled in a custom way. In those cases, this cronjob script can take care of the expired sessions.
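The expiry rule described above (expiry is recalculated whenever a session is created or updated, and anything past its expiry is collected) can be sketched in Python. The in-memory dictionary and the function names are hypothetical and only stand in for the session table.

```python
def touch(sessions, key, now, session_timeout):
    """Create or update a session: expiry is recalculated at this point."""
    sessions[key] = now + session_timeout


def expired_session_keys(sessions, now):
    """Return keys of sessions whose stored expiry time has passed.

    `sessions` maps a session key to its expiry timestamp (seconds).
    """
    return [key for key, expires in sessions.items() if expires < now]


if __name__ == "__main__":
    sessions = {}
    touch(sessions, "a", now=0, session_timeout=100)
    touch(sessions, "b", now=50, session_timeout=100)
    # At t=120 only session "a" (expiry 100) is past its expiry time.
    print(expired_session_keys(sessions, now=120))
```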

Clearing expired template block caches

If you are using the "cache-block" template function with the "subtree_expiry" parameter to cache the contents of a template block, this cache block will only expire if an object is published below the given subtree (instead of the entire content node tree). The "DelayedCacheBlockCleanup" setting located in the "[TemplateSettings]" section of the "site.ini" configuration file controls whether expired cache blocks with the "subtree_expiry" parameter will be removed immediately or not. If this setting is enabled, the expired cache blocks must be removed manually or using the "subtreeexpirycleanup.php" cronjob script.

Clearing the static cache

Static cache is a feature that can drastically improve performance, since the caches are generated as plain HTML. Because cached content is static, the staticcache_cleanup.php cronjob script is needed to force the cache to be regenerated.

This script only removes the entries from the database. If you need to delete the cache files as well, this must be done manually. The static cache files can be found in the path defined by the StaticStorageDir setting in your staticcache.ini.append.php file.

Emptying the trash bin

The sole purpose of the trashpurge.php cronjob script is to permanently remove all trashed content from your trash bin. This lets you trigger the action on a schedule, without having to run a script manually or perform the removal from the administration interface. Both the files and the database entries are removed.

Removing locks from locked content objects

This cronjob script removes the lock from content objects with status locked, making them available.

For more details about locks on content objects please read the User defined Object States chapter.

Removing objects at specific times

The "unpublish.php" script makes it possible to remove content objects when a specified date is reached. For example, you may wish to delete some articles (move them to the trash) after a couple of days, weeks or months. The following steps show how this can be done.

- Add a new attribute of the "Date and time" datatype to your article class using the "Setup - Classes" part of the administration interface. Specify "unpublish_date" as the attribute's identifier; a new field will become available when the objects (in this case articles) are edited. This field can be used to specify when the cronjob should remove the object. If the attribute is left blank, the object will not be affected by the unpublish cronjob.

- Configure the "unpublish.php" cronjob by adding the following lines to your "content.ini.append.php" configuration file:

      [UnpublishSettings]
      RootNodeList[]=2
      ClassList[]=2

You should specify the ID number of your article class in the "ClassList" configuration array and the ID number of the parent node for your articles in the "RootNodeList" setting.

Analyzing the Apache log files

It is possible to have the view statistics for your site pages stored in the eZ Publish database. To do this, you will have to run the "updateviewcount.php" cronjob script periodically. The script will update the view counters of the nodes by analyzing the Apache log file (the view counters are stored in a database table called "ezview_counter"). When executed, the script will update a log file called "updateview.log" located in the "var/example/log/" directory (where "example" is usually the name of the siteaccess that is being used - it is set by the "VarDir" directive in "site.ini" or an override). This file contains information about which line in the Apache log file the script should start from the next time it is run.

You will also have to create an override for the "logfile.ini" configuration file and add the following lines there:

    [AccessLogFileSettings]
    StorageDir=/var/log/httpd/
    LogFileName=access_log
    SitePrefix[]=example
    SitePrefix[]=example_admin

Replace "/var/log/httpd" with the full path of the directory where the Apache log file is stored, specify the actual name of this file instead of "access_log", replace "example" and "example_admin" with the names of your siteaccesses (if you have more than two siteaccesses, list all of them).

Once the correct settings are specified and the "updateviewcount.php" cronjob script is run periodically, you will be able to fetch the most popular (most viewed) nodes using the "view_top_list" template fetch function and/or check how many times a node has been viewed (as described in this example).
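The incremental approach described in this section (remember where the previous run stopped, then count only newer log lines) can be sketched in Python as follows. This is not the logic of "updateviewcount.php". The sketch assumes Common Log Format, assumes that URLs start with the siteaccess name, and uses the hypothetical function name "count_new_views".

```python
from collections import Counter


def count_new_views(log_lines, start_line, site_prefixes):
    """Count page views in log lines not processed by an earlier run.

    Returns (counts, next_start_line). Assumes Common Log Format, where the
    request is quoted as "GET /path HTTP/1.x"; the URL layout
    /<siteaccess>/<path> is also an assumption.
    """
    counts = Counter()
    for line in log_lines[start_line:]:
        parts = line.split('"')
        if len(parts) < 2:
            continue  # no quoted request section
        request = parts[1].split()
        if len(request) < 2:
            continue  # malformed request
        path = request[1]
        segments = path.lstrip("/").split("/", 1)
        if segments[0] in site_prefixes:
            # Strip the siteaccess prefix so all siteaccesses count together.
            path = "/" + (segments[1] if len(segments) > 1 else "")
        counts[path] += 1
    return counts, len(log_lines)


if __name__ == "__main__":
    lines = [
        '1.2.3.4 - - [01/Feb/2010:17:00:00 +0100] "GET /example/news HTTP/1.1" 200 512',
        '1.2.3.4 - - [01/Feb/2010:17:00:05 +0100] "GET /example_admin/news HTTP/1.1" 200 512',
    ]
    counts, next_line = count_new_views(lines, 0, {"example", "example_admin"})
    print(dict(counts), next_line)
```

Storing the returned line number between runs plays the role that "updateview.log" plays in the description above: the next run starts from that line instead of re-reading the whole file.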

Processing workflows

In order to use workflows, you will have to run the "workflow.php" cronjob script periodically. The script takes care of processing the workflows. For example, suppose you are using the collaboration system and changes made in the "Standard" section cannot be published without your approval. (This can be set up by creating a new "Approve" event within a new workflow initiated by the "content-publish-before" trigger function.) If somebody other than the administrator changes article "A", the system generates a collaboration message "article A awaits your approval" for you and another, "article A awaits approval by editor", for the user who changed it. (Run "notification.php" periodically so that users can be notified by email about new collaboration messages.) You can view your collaboration messages and review, approve or reject the changes using the "My Account - Collaboration" part of the administration interface. However, the changes are not applied to article A immediately after you approve them; this happens the next time the "workflow.php" cronjob script is executed.

Clearing expired binary items from DB-based cluster installations (eZDB and eZDFS)

This is done by means of a cronjob and should be used for regular maintenance. The necessary cronjob is located here:

cronjobs/clusterbinarypurge.php

By default, this cronjob is part of a cronjob group named cluster_maintenance. Run it with the following command from the root of the eZ Publish directory:

$php runcronjobs.php cluster_maintenance -s ezwebin_site

Note that "$php" should be replaced by the path to your PHP executable. How to run a script can also differ depending on your system. Please consult your system's manual for more information.

It is recommended to run this cronjob weekly, although it can be done more frequently for websites where binary files are removed frequently.

For more information please visit our Issue Tracker: issue 015793.

Svitlana Shatokhina (14/09/2010 12:12 pm)

Ricardo Correia (09/12/2013 11:38 am)

Comments

Manual link validation

Thursday 11 January 2007 3:21:18 pm

Mauro

use of the SitePrefix INI setting

Monday 12 February 2007 2:48:56 pm

Svitlana Shatokhina

See http://issues.ez.no/9559 for more information.

EZ FLOW Cronjob

Friday 14 November 2008 10:01:48 pm

Ludmillia

Taking care of updateviewcount needed file in a cronjob log strategy

Monday 01 February 2010 5:01:38 pm

Ronan

and if /var/log/httpd/mywebsite.log is missing,

and if you log your cronjobs using php runcronjob.php ... >> mycronjobs.log

update view count script will raise a warning message in your mycronjobs.log file, as it'll try to fetch the missing /var/log/httpd/mywebsite.log file